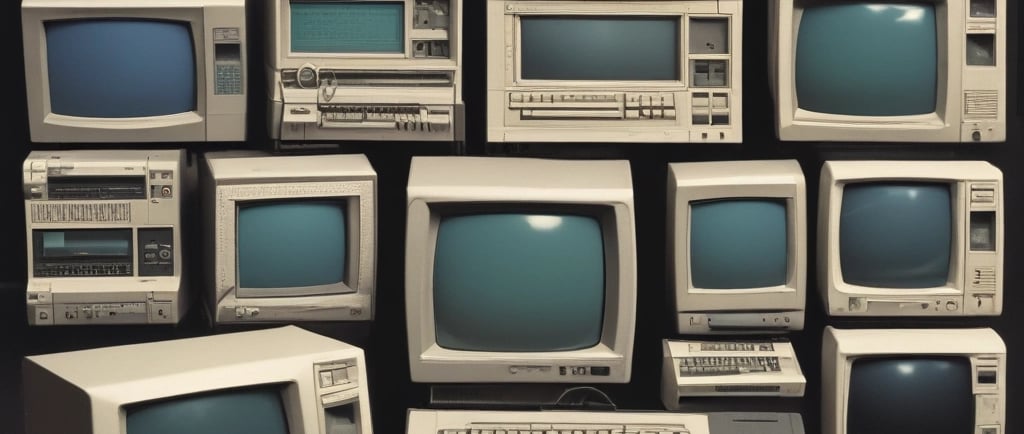

Evolution of the Computer

Manoj Agarwal

12/25/2024 · 3 min read

The evolution of the computer has been a gradual process that spans thousands of years, from the earliest counting devices such as the abacus to the modern-day personal computer. The development of the computer has been driven by the need to perform complex calculations and process large amounts of data quickly and efficiently.

One of the earliest known counting devices is the abacus, which was used in various forms by ancient civilizations such as the Babylonians, Egyptians, and Chinese. The abacus was a simple but effective tool for basic arithmetic operations such as addition, subtraction, multiplication, and division. It did not disappear when the mechanical calculator appeared in the 17th century. It remained in widespread use for centuries afterward, and in parts of Asia it was still common well into the 20th century.

The mechanical calculator was a significant advancement in the history of computing. The first known mechanical calculator was designed by Wilhelm Schickard in 1623, but it remained largely unknown and had little influence. Blaise Pascal began developing his calculator, the Pascaline, in 1642, and it is often regarded as the first practical mechanical calculator. The Pascaline was operated by turning numbered wheels and could perform addition and subtraction. However, it was not very reliable and was expensive to produce, so relatively few were made.

In the 19th century, Charles Babbage proposed mechanical devices that could perform mathematical calculations automatically. He first designed the Difference Engine to compute mathematical tables. He then designed the Analytical Engine, a general-purpose mechanical computer that could be programmed with punched cards to carry out a wide range of calculations. Although Babbage never completed the Analytical Engine, it is considered the first design for a general-purpose computer.

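The Electronic Numerical Integrator and Computer (ENIAC) was developed during World War II and completed in 1945. It is often described as the first general-purpose electronic digital computer, since earlier electronic machines such as the Atanasoff-Berry Computer and Britain's Colossus were built for specific tasks. ENIAC was a massive machine that filled a large room and was designed to calculate artillery ballistic trajectories. It was a major breakthrough in the history of computing. However, reprogramming it meant manually rewiring cables and resetting switches, which made it impractical for everyday use.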

The UNIVAC I, delivered in 1951, was the first computer produced commercially in the United States. ENIAC had been built for a single military customer. The UNIVAC I, by contrast, was sold to a range of government and business customers, which brought computers to a much wider variety of tasks. It was among the first computers used for business applications, such as accounting and inventory management.

In 1964, IBM announced the System/360, a family of mainframe computers that shared a common instruction set across a wide range of sizes and prices. Because software written for one model could run on the others, a small business could start with an entry-level machine and later move to a larger one without rewriting its programs. Large organizations, meanwhile, could use the same architecture at scale. This helped spur the growth of the computer industry and paved the way for the development of other types of computers.

The development of the microprocessor in the 1970s was a major turning point in the history of computing. A microprocessor is a single chip that integrates the components of a computer's central processing unit (CPU). The first commercial example was the Intel 4004, released in 1971. Microprocessors made it possible to build smaller and more affordable computers, which led to the development of the personal computer (PC).

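The Altair 8800 was released in 1975 by MITS, the company founded by Ed Roberts. It is widely regarded as the first commercially successful personal computer. It was a simple machine built around the Intel 8080 microprocessor and was programmed through switches on its front panel. It could run simple programs, including an early version of the BASIC language. The rise of the PC was a major breakthrough in the history of computing, as it made computers accessible to individuals and small businesses.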

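The internet and the World Wide Web had a profound impact on the computer industry. The internet grew out of ARPANET, launched in 1969, and expanded through the 1980s after the adoption of the TCP/IP protocols in 1983. The World Wide Web, invented by Tim Berners-Lee around 1989, brought the internet to the general public in the 1990s. Together they made it possible for individuals and organizations to communicate and share information easily and quickly. Email had begun on ARPANET in the early 1970s, and the internet made it part of everyday life. It also enabled new services, such as online shopping and social media, which have become an integral part of our lives today.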

In recent years, technologies such as artificial intelligence, cloud computing, and the Internet of Things have developed rapidly. These technologies are driving demand for more powerful and capable computers and are expected to have a significant impact on many industries and on many aspects of our lives.